In many ways, Marvel’s Avengers: Endgame was a fitting farewell to the ‘Infinity Saga’. We witnessed the epic culmination of important story arcs and revisited some incredible moments from the MCU’s past, and, more importantly, the film also laid the groundwork for the MCU’s future.

Amid the whole gamut of emotions we were subjected to, Endgame also treated its viewers to complex yet aesthetically pleasing visuals that perfectly captured the gravity of the film.

New Zealand’s visual effects outfit Weta Digital played an instrumental role in this, delivering as many as 494 VFX shots, ranging from Thanos’ nuanced appearance and Iron Man’s nano-suit to the destruction of the Avengers compound and the rabble-rousing assemblage of Marvel’s women superheroes.

VFX supervisor Matt Aitken takes us through the gruelling process of how it all came about!

What changes did you make to Thanos’ appearance?

The Thanos we see in Endgame is four years younger than the Thanos of Avengers: Infinity War, arriving at the battlefield from the Guardians of the Galaxy era. This Thanos is at the peak of his physical power and ready for battle.

Compared with the Thanos on Titan in Infinity War, who was more of a philosopher meditating on past actions, this Thanos is dressed in his armor and planning to destroy the whole universe and then rebuild it from scratch. As well as creating his armor, we made animation changes to his physical fighting style to reflect his increased strength and agility. We also updated Thanos’ facial rig, building on and improving Weta Digital’s Infinity War Thanos asset. We once again used a digital actor puppet as an intermediary stage, mapping Josh Brolin’s on-set performance to this first before transferring it to our digital Thanos puppet.

By doing this, we can confirm that we have captured all the nuances of Josh Brolin’s facial performance before migrating the facial animation from the actor puppet to the Thanos puppet.

For Avengers: Endgame, we further refined the Thanos facial rig to get around some shortcomings that we had experienced on Infinity War but didn’t have the chance to fix. In particular, we added more controls to the corners of his mouth to achieve greater fidelity and complexity in the performance. We also took advantage of some new tech that our facial animation team had developed which we call ‘deep shapes’.

Deep shapes add another layer of realism to the facial solve without adding any extra overhead to the task of sculpting the set of facial targets. Deep shapes can be thought of as adding another octave of complexity to the transition of the face from one expression to the next, without influencing the shape of the face at either the start or end point of those transitions. This is important because a crucial aspect of our approach to facial animation at Weta Digital is that the facial animator should be able to retain complete control over the shape of the face.
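The interview doesn’t describe how Weta Digital’s deep shapes are actually implemented. As a purely hypothetical illustration of the property described above (extra detail during a transition that leaves the start and end shapes untouched), one simple way to get that behaviour is to add a term weighted by t(1 − t), which is zero at both ends of the transition. The function name, the flat coordinate lists and the quadratic weight are all assumptions made for this sketch.

```python
def blend_with_deep_shape(start, end, deep_offset, t):
    """Hypothetical sketch: blend two expression shapes with an in-between term.

    start, end:   flat lists of vertex coordinates for the two expressions.
    deep_offset:  flat list of extra displacements (an assumed 'deep' layer).
    t:            transition parameter, 0.0 (start) to 1.0 (end).
    """
    # 4 * t * (1 - t) is 0 at t = 0 and t = 1 and peaks at 1.0 when t = 0.5,
    # so the extra layer only shows up mid-transition and never alters the
    # animator-sculpted start or end shapes.
    weight = 4.0 * t * (1.0 - t)
    return [
        (1.0 - t) * s + t * e + weight * d
        for s, e, d in zip(start, end, deep_offset)
    ]


start = [0.0, 0.0, 0.0]
end = [1.0, 1.0, 1.0]
deep = [0.5, 0.5, 0.5]
print(blend_with_deep_shape(start, end, deep, 0.5))
```

Because the weight vanishes at both endpoints, the animator keeps full control over the key expressions, which is the point the answer above stresses.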

Thanos completely destroys the Avengers Compound towards the end. How did you go about creating the compound’s complete demolition?

The shots of Thanos using his H-Ship to destroy the Avengers Compound, which kick off the third-act battle, involved large-scale destruction simulations designed by our fx department, based on timings determined by our animation team. That series of shots is entirely CG, because the compound itself is a CG creation. Animation designed the shots, including the camera motion and the timing and placement of the explosions, which procedurally drive the timing and placement of the destruction simulations.

The destruction uses a combination of rigid body simulations for the building destruction, fluid simulations for missiles hitting the river, volumetric simulations for pyrotechnics and smoke, and particle simulations for dust and fine-level dirt and debris. The very large-scale explosion that caps off the sequence had to be scaled back: our first version of that blast looked too big for anyone on the ground to have a hope of surviving.

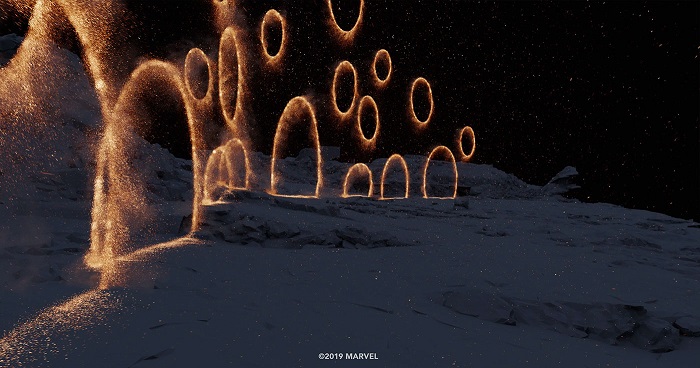

How did you optimise Dr. Strange’s portals so as to accommodate multiple portals in one frame?

For Infinity War, we created the shots where Dr. Strange generates portals during the fight scene on Titan, but those portals were human-scale and usually there was only one in shot at any one time. The portals that bring all the heroes to the battlefield in Endgame had to be much bigger to encompass entire armies, and multiple portals were visible in a single shot.

As well as this, we added further refinements including more accurate modelling of the temperature of the portal sparks, and optically correct motion blur of the sparks. This all required optimisation of the portal simulations to enable us to complete these shots within the movie’s tight post-production schedule.

How did you create the environments of Wakanda, Kamar-Taj, etc. glimpsed through the portals?

The environments we see through the portals that open up behind Captain America are entirely CG. This was necessary so we could render them with the same camera that was being used on the crater side of the portal, ensuring that their parallax matched that of the ruined compound battlefield as the camera drifted past. Our digital matte painting department created and rendered the environments in a software package called Clarisse, using reference footage provided by the production including key shots from previous movies.

The view of each location (Wakanda, New Asgard, Kamar-Taj, Titan, Contraxia, etc.) was carefully orchestrated for each shot to ensure that the angle on the environment gave an immediately recognisable view of the location through each portal, even though this required cheating the view to be different from shot to shot.

You also worked on Iron Man’s nano-suit. How did you create it? How does the suit in Endgame differ from the one in Infinity War?

Weta Digital has a great tradition of working on Iron Man and his suits that goes back to The Avengers and the scene where Iron Man, Thor and Captain America meet in the forest for the first time and fight. Then for Iron Man 3 we created a huge variety of suits, as Tony has been tinkering. For Infinity War, Tony has created the Mark 50 suit with bleeding edge nanotechnology. For that suit, we developed the nanotech look that enables Iron Man to have the suit form different weapons on the fly, as the nanoparticles that make up the suit can flow from one part of Tony’s body to another and take on different shapes.

The bleeding edge weapons that Iron Man generates through the fight on Titan manifest themselves via procedural modelling techniques combining particle and fluid simulations. Each bleeding edge event is made up of three stages: first, a liquid metal fluid simulation allows the suit’s material to move around; this fluid is then crystallised into an underlying framework or mesh based on a particle simulation; and finally, another particle simulation provides the outer shell of the suit.

For Endgame, Tony’s new Mark 85 suit retained the nanotech capabilities, but this time the suit has a more armor-plated look. Iron Man took a beating from Thanos in Infinity War, and so he has spent the intervening years creating an even stronger suit in case he ever gets into that situation again. Part of the work involved reworking the material characteristics of the suit so it had more of a solid steel look and less of a sports-car, clear-coat appearance.

In Endgame, we used the nanotech when Tony decides to do the snap. He palms the stones from Thanos, using the bleeding edge technology to grab them. He then forms a gauntlet using the bleeding edge technology, with all the terrible energy and power that that unleashes. The suit protects Tony as much as it can, but the stones’ power damages the suit. The suit tries to repair itself with bleeding edge tech, but ultimately it can’t protect Tony and he suffers terrible injuries as a result. The suit damage and repair action was generated using detailed simulations that went through multiple iterations before we settled on the correct balance between showing the damage that the energy of the stones was causing Tony and not detracting from Robert Downey Jr.’s performance at this crucial turning point in the movie.

Could you tell us more about that epic ‘women of Marvel’ scene?

Towards the end of the battle there’s a shot where Spidey hands the gauntlet to Captain Marvel and she is joined by pretty much every woman character from the MCU; it kicks off the so-called Women of Marvel beat in the end battle. That was an amazing day to be on set, just to witness the combined acting talent present. The only actor who wasn’t able to be there was Tom Holland, playing Peter Parker, so we filmed him separately a few weeks later and integrated him into the shot.

In the action that follows, the Women of Marvel team up to help Captain Marvel get the gauntlet to the van and the quantum tunnel: Scarlet Witch and Valkyrie collaborate to take out Leviathans; Rescue, Shuri and Wasp join forces to send Thanos tumbling. The ideas for the specific action beats in this sequence were still being developed during the block of additional photography, and so shooting was limited to general coverage. Marvel eventually approached us in February and asked us to previsualise the action based on a short text description of how it could play out.

Marvel’s usual previs vendor, The Third Floor, had wrapped its work on the show and was on other projects. So our animation team, under Animation Supervisor Sidney Kombo-Kintombo, brainstormed the details of the action and worked it up as rough animation, which we edited together and sent through to Marvel. It helped that all but a few of the 25 shots in the sequence were full CG. Marvel’s response to our proposal was very positive; their editor Jeff Ford did a pass on it to tighten it up, and that became our blueprint for finishing the sequence. Because our previs also worked as first-pass animation, the process of working the shots through to final was a very direct one.

What changes did you make to the post-snap ‘blip’ effect?

When Tony snaps his fingers, Thanos’ entire army turns to dust in an effect we call the ‘blip’. We used the blip processes we had developed for the end of Infinity War as a starting point, but the Endgame shots presented new challenges. In Infinity War we were blipping individual characters, whereas for Endgame we had to make a whole army disappear, including tanks, flying vehicles and drop ships. We added level-of-detail support to our blip pipeline, using Infinity War’s approach for hero foreground blip events and an optimised version we called ‘blip lite’ for midground events, which didn’t need all the fine detail of a hero blip but still gave us a lot of control over the timing of the blip.

For the majority of the background characters and falling vehicles, we developed an even simpler version of the blip that ran inside our compositing software, Nuke, using a plug-in called Eddy. Eddy is a volumetric simulation and rendering tool that runs within Nuke, and it was ideal for the high-volume blip requirements of those shots. The overall timing of all the individual blip events was determined by our animation department.

When Thanos realises he has lost, he resigns himself to the blip. Thanos’ blip is the most sophisticated blip effect we have generated. To create it, we extended the Infinity War techniques to include more fine-level control over the timing of the blip, and more sophisticated interaction between the wind field that takes the blip flakes and dust away, and the parts of Thanos that are blocking the wind field. These colliders had to be updated dynamically frame by frame as Thanos blipped away. As with Infinity War, we made sure the blip flakes and dust were generated from the volume of Thanos’ mass, so it didn’t look like he turned into a hollow shell at the point where he blipped away. I felt it was important that there was a beat at the end of the Thanos blip where the frame was completely clear, so as an audience, we knew he had really gone.

What was the most challenging scene to work on? How did you pull it off?

The whole end battle was hugely challenging to achieve but in particular the fight between Scarlet Witch and Thanos was surprisingly difficult. Many of those shots are entirely or predominantly CG, including a hero Scarlet Witch digital double. But the real challenge was in creating Scarlet Witch’s magic power. Scarlet Witch takes her powers to the next level in Endgame. For Wanda, it is only five minutes since Thanos killed the love of her life, Vision, and so she is seething with anger. Since she first appeared in the MCU, Scarlet Witch has been developing her powers and in Endgame she becomes powerful enough to triumph over Thanos, stripping the armor right off him, until he retaliates by ordering his H-Ship to strafe the battlefield.

Working off reference images provided by Marvel, including key frames of comic book art, we worked up the effect as a combination of volumetric and particle simulations developed by our fx team. The renders of these then went to our compositing department as raw material from which to create the final effect. This went through many iterations before we settled on the final look, which had to be immensely powerful but also recognisable as the same Scarlet Witch magic power that the audience had seen her use in previous MCU films. These iterations took time to turn around and this effect was in development for several months before we felt we had achieved the desired look.

You also worked on the grand-scale battle scenes of The Lord of the Rings. How do the challenges compare with those of Endgame?

It was exciting for those of us working on Avengers: Endgame at Weta Digital who had also worked here on The Lord of the Rings to be back in the world of creating spectacular large-scale battle scenes. A lot of the challenges for Endgame were familiar to us from our work on The Lord of the Rings, including how to deliver on the scale and spectacle while also keeping the story grounded in the personal experience of our heroes. As with The Lord of the Rings, we used Massive, the crowd simulation software developed at Weta Digital to create the battle scenes in Rings, to animate the many thousands of warriors in the Endgame final battle.

We felt strongly that the individual fighting styles of each of the different warrior types (Wakandans, Asgardians, Sorcerers and Ravagers on the heroes’ side; Chitauri, Outriders and Sakaarans on Thanos’ side) should be reflected in the background fighting action in the battle. We wanted to present all that visual complexity and not simply have the battle be made up of pairs of warriors standing hacking away at each other with swords. To enable this, we spent several days on the mocap stage with a team of stunt performers recording battle vignettes: three Wakandans taking out three Chitauri, a horde of Outriders savaging a group of Sorcerers. This material also allowed us to vary the tone of the battle and show that Thanos’ army is dominating at the point where Tony decides to use the stones.

Iron Man looks grievously injured after the snap. What were the challenges in bringing about the effects?

We created the burns on his head and neck as a digital prosthetic. This involved developing a variant of our Tony Stark digital double that had the deeper wounds modelled into the geometry, a revised hair groom where his hair was singed away at his temples, and detailed texture work for the scarring and blood. This CG wound was rendered onto a match-move of the actor’s head and then tracked into place to ensure that the CG picked up all the fine details of the actor’s facial performance. A balance had to be achieved between making sure that the wound looked serious enough to be ultimately fatal to Tony and not making it so gory that it would distract from Robert Downey Jr.’s performance.

The filmmakers were very clear that they wanted Tony to be able to retain his dignity through this sequence. Creating the wound as a digital prosthetic, as opposed to filming the actor with a practical prosthetic, allowed for the level of damage to be explored in the context of the final cut of the sequence. A practical prosthetic would have locked the filmmakers into a certain look which might’ve been too little damage, or too much. We welcomed the opportunity to work with the filmmakers to explore the nuances of Tony’s appearance at this pivotal moment in the movie.

How big was your VFX team (how many people were involved)?

Our core crew size on Endgame was around 570 people.

How long did it take to deliver all the shots?

The production team shot most of the material for our sequences in a five-week block of additional photography in September and October of 2018 at Pinewood Atlanta Studios in Georgia, U.S.A. They started to turn that material over to us soon after the shoot wrapped, and we had most of the show turned over to us by the end of 2018. We were in production on the shots for a period of 16 weeks, delivering our last shot at the end of the first week of April. We finalled 60 percent of our shots in our last two weeks on the show.